PrivateGPT v0.3.0

Feb 14, 2024

PrivateGPT, the open-source project maintained by our team at Zylon, has just released a new version, v0.3.0. This update brings a host of new features, improvements, and bug fixes to make your experience even better.

To get started with PrivateGPT v0.3.0, check out the tagged v0.3.0 release and install the updated dependencies:

poetry install --with ui,local

Let's dive into the details.

Features 🌟

UI Enhancements:

PrivateGPT v0.3.0 introduces several UI improvements to make your interaction with the platform more enjoyable and efficient. Here's what's new:

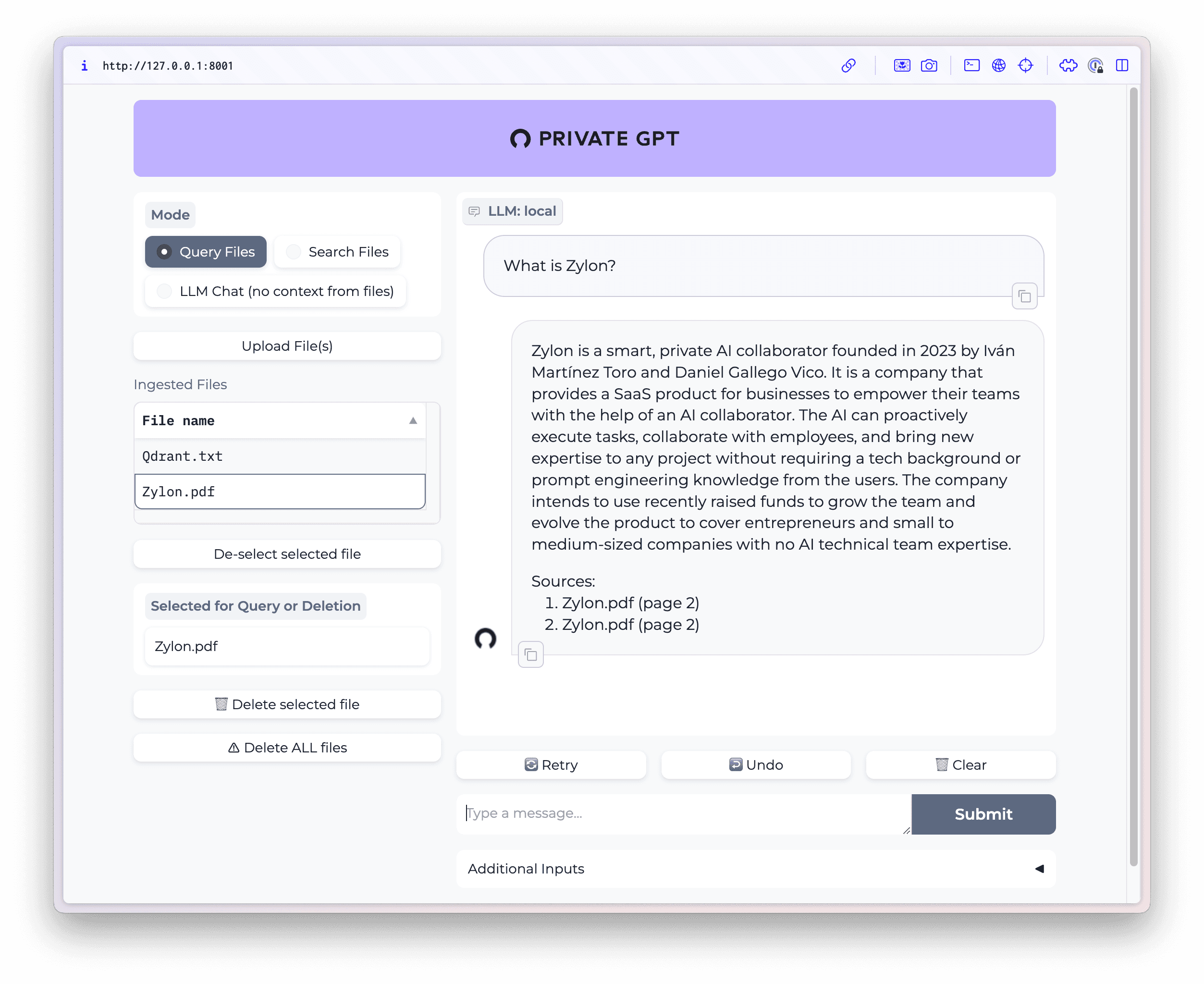

Select the file to query: You can now choose a specific ingested file to query, instead of always querying across all ingested files.

Delete selected file: A long-awaited feature! You can now delete individual ingested files from the UI.

Delete all ingested files: With a single click, you can delete all the ingested files.

Make chat area stretch: The chat area now stretches to fill the entire screen, providing a more immersive experience.

Faster UI: We've optimized the UI to use less CPU, so the interface itself no longer becomes a bottleneck.

PrivateGPT v0.3.0 UI with new features
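Selecting a file in the UI amounts to scoping a query to particular documents, and you can do the same thing through the API. The sketch below only builds the request. It assumes three things you should check against your server's API reference: a local server on the default port 8001, a /v1/completions endpoint that accepts use_context, and a context_filter object carrying a docs_ids list. The document ID in the example is a hypothetical placeholder.

```python
import json
import urllib.request

# Assumption: a PrivateGPT server running locally on its default port.
BASE_URL = "http://localhost:8001"


def build_scoped_completion_request(prompt, doc_ids, base_url=BASE_URL):
    """Build (but do not send) a completion request limited to doc_ids.

    Assumes /v1/completions accepts `use_context` and a `context_filter`
    with a `docs_ids` list; verify these names against your server.
    """
    body = {
        "prompt": prompt,
        "use_context": True,  # answer from ingested documents
        "context_filter": {"docs_ids": list(doc_ids)},  # only these docs
        "include_sources": True,
        "stream": False,
    }
    return urllib.request.Request(
        f"{base_url}/v1/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_scoped_completion_request(
        "Summarize this report.", ["hypothetical-doc-id"]
    )
    # Against a running server you would send it like this:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
    print(req.get_method(), req.full_url)
```

Building the request separately from sending it keeps the payload easy to inspect before you point it at a live server.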

API Upgrades:

PrivateGPT v0.3.0 comes with several API improvements, including:

Allow ingesting plain text: You can now send plain text directly to PrivateGPT for ingestion, without first saving it to a file.
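Here is a minimal sketch of what a plain-text ingestion call could look like. It assumes an endpoint at /v1/ingest/text whose JSON body has a file_name (used as the document's name) and a text field, served on the default local port 8001. These are assumptions, so confirm them in your server's API docs. The function only builds the request.

```python
import json
import urllib.request

# Assumption: a PrivateGPT server running locally on its default port.
BASE_URL = "http://localhost:8001"


def build_ingest_text_request(file_name, text, base_url=BASE_URL):
    """Build (but do not send) a request that ingests raw text.

    Assumes POST /v1/ingest/text with a JSON body of
    {"file_name": ..., "text": ...}; check your server's API reference.
    """
    body = {"file_name": file_name, "text": text}
    return urllib.request.Request(
        f"{base_url}/v1/ingest/text",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_ingest_text_request("meeting-notes.txt", "Q1 goals: ship v0.3.0.")
    # To ingest for real against a running server:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
    print(req.get_method(), req.full_url)
```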

New Language Models: We've added support for the Mistral and ChatML prompt styles, as well as for OpenAI and Ollama LLMs.
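A prompt style determines how a conversation is serialized into the single string a model actually receives. To show what that means for ChatML, the sketch below renders (role, content) pairs with the standard <|im_start|> and <|im_end|> markers and leaves an assistant turn open for the model to complete. It illustrates the ChatML format in general and is not PrivateGPT's own implementation. The Mistral style uses different markers and is not covered here.

```python
def to_chatml(messages):
    """Render (role, content) pairs as a ChatML prompt.

    Each turn is wrapped as <|im_start|>{role}\\n{content}<|im_end|>,
    and a trailing open assistant turn cues the model to reply.
    """
    turns = [f"<|im_start|>{role}\n{content}<|im_end|>" for role, content in messages]
    turns.append("<|im_start|>assistant\n")
    return "\n".join(turns)


if __name__ == "__main__":
    print(to_chatml([("system", "Be brief."), ("user", "What is RAG?")]))
```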

Configurable context_window and tokenizer: You can now configure the context_window and tokenizer settings to match the model you are running, for better inference.
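Why these two settings go together: the tokenizer decides how many tokens a piece of text costs, and the context window caps the total for the prompt plus the tokens reserved for the answer. The generic sketch below is not PrivateGPT's code. It drops the oldest chat messages until the prompt fits the budget. The whitespace tokenizer is only a stand-in. A real setup counts with the model's own tokenizer, which is why a mismatched tokenizer yields wrong budgets.

```python
from typing import Callable, List


def trim_history(
    messages: List[str],
    count_tokens: Callable[[str], int],
    context_window: int,
    max_new_tokens: int,
) -> List[str]:
    """Drop the oldest messages until the prompt, plus the tokens
    reserved for generation, fits inside the context window."""
    budget = context_window - max_new_tokens  # tokens left for the prompt
    kept = list(messages)
    while kept and sum(count_tokens(m) for m in kept) > budget:
        kept.pop(0)  # discard the oldest message first
    return kept


if __name__ == "__main__":
    # Stand-in tokenizer for illustration only: one token per word.
    def whitespace_tokens(text: str) -> int:
        return len(text.split())

    history = ["one two three", "four five", "six"]
    print(trim_history(history, whitespace_tokens, context_window=6, max_new_tokens=3))
```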

Bulk-ingest:

PrivateGPT v0.3.0 introduces the --ignored flag, allowing you to exclude specific files and directories during ingestion.
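To show the idea behind an ignore list, here is a minimal sketch of a directory walk that skips ignored files and directories. It is not the actual bulk-ingestion script. Matching on exact file or directory names is an assumption made for illustration. Ignored directories are pruned so the walk never enters them.

```python
import os
import tempfile
from pathlib import Path


def iter_files(root, ignored):
    """Yield files under root, skipping any file or directory whose
    name is in `ignored`. Ignored directories are never descended into."""
    ignored = set(ignored)
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune in place so os.walk does not descend into ignored dirs.
        dirnames[:] = [d for d in dirnames if d not in ignored]
        for name in filenames:
            if name not in ignored:
                yield Path(dirpath) / name


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for rel in ["a.txt", ".git/config", "docs/b.md", "secret.txt"]:
            path = Path(root) / rel
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_text("sample")
        names = sorted(p.name for p in iter_files(root, [".git", "secret.txt"]))
        print(names)
```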

Bug Fixes 🐛

Our dedicated team of contributors has worked tirelessly to address several bugs in this release, including:

LlamaCPP generation fix: We've added an LLM parameter to fix a broken generator from LlamaCPP.

Dockerfile fixes: We've resolved issues with both local and external Dockerfiles.

Minor bug in chat stream output: We've fixed a bug that caused a Python error to be serialized into the chat stream output.

Settings corrections: We've corrected several issues with settings, including multiline strings and loading test settings only when running tests.

Contributors 🤝

A big thank you to all our contributors for their incredible work in making PrivateGPT v0.3.0 a reality. Your dedication and commitment to the project are truly appreciated.

Main community contributors of this version: Robin Boone, ygalblum, smbrine, naveenk2022, icsy7867, roblg

What's Next? 🔜

We're excited to share that several new features are in the works, including a Node SDK, new functional APIs, and more integrations. Stay tuned for updates!

For more information, check out the full release notes on GitHub: https://github.com/imartinez/privateGPT/releases/tag/v0.3.0

Happy PrivateGPTing! 😊